How Kubernetes can make your high load project viable in 2020

Kubernetes is an open-source system for the automatic deployment, scaling, and management of containerized applications.

We share insights into Kubernetes, discussing its role in container orchestration, its features, and why it's become a critical tool for managing complex cloud-native applications. The article covers best practices and the future of Kubernetes in the cloud ecosystem.

Kubernetes is an open-source container orchestration system that can help you build a scalable infrastructure using high load approaches, even on a weak server. In this article, we’ll show you why Kubernetes is worth using in 2020.

Let's suppose you have a small development team working on a project that is not yet large-scale. It does not require multiple powerful servers, and updates are rolled out manually. Even if the production server is unavailable for several minutes due to a malfunction, there are no severe consequences. So you decide to wait until it is absolutely necessary before building an appropriately sized infrastructure that can support the eventual high load.

We have had multiple clients come to us asking for help in exactly this situation. Because they had not built the right infrastructure ahead of time, a single minute of service downtime caused by an infrastructure failure cost them millions.

Very often, this important aspect of the project is left for the future because of its supposed complexity and the potentially high cost of creating and maintaining the necessary infrastructure. But with an approach tailored to the project, you can build everything you need into the infrastructure at the initial stages. At Evrone, when we begin development of a new project, we start applying high load approaches from the first day.

How Kubernetes works

Docker allows you to easily separate the application from the hardware and deploy the app. Kubernetes makes this process even more convenient, as it allows you to work with a set of Docker images in batches, run them on a large number of virtual servers, and group, manage, and configure them according to existing tasks. In other words, Kubernetes facilitates easy scaling as soon as the number of services starts to grow, significantly saving you time, money, and manpower.

Kubernetes performs equally well both locally and in the cloud. Amazon Web Services, Microsoft Azure, Google Cloud, Yandex Cloud, Mail.ru Cloud, Alibaba Cloud, and Huawei Cloud all support it out of the box. However, many people are wary of using Kubernetes, since it has developed an unwarranted reputation for being overly complicated and expensive. We’re going to address these misconceptions and show you the benefits of Kubernetes for your business.

Is Kubernetes difficult to use?

Kubernetes offers extensive documentation and a wealth of community experience. You can use the Helm package manager (similar to Apt and Yum) to significantly simplify deployment. Helm uses “charts”, packages of templated Kubernetes resource definitions and their default settings, to create an instance of the application in the Kubernetes cluster.

There are many ready-to-use charts, and you can pick an existing solution from Kubeapps, the application directory for the Kubernetes infrastructure. This allows you to configure your app deployment with a single click. In most cases, there will already be a chart that is suitable for your needs. If a chart does not fully meet your requirements, it is easy to write your own, using the existing chart as a reference.
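To make the idea of a chart more concrete, here is a minimal sketch of the underlying mechanism: a manifest template combined with default values that can be overridden per deployment. It is only an analogy written in Python. Real Helm charts use Go templates with `{{ .Values.name }}` style expressions, and the names `MANIFEST_TEMPLATE`, `DEFAULT_VALUES`, `render_manifest`, and the image `example/web:1.0` are hypothetical.

```python
from string import Template

# Hypothetical Deployment template. The "$name" placeholders stand in for
# the Go-template expressions a real Helm chart would use.
MANIFEST_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
""")

# Defaults shipped with the "chart" (illustrative values only).
DEFAULT_VALUES = {"name": "web", "replicas": 1, "image": "example/web:1.0"}


def render_manifest(overrides=None):
    """Merge user overrides into the defaults and fill in the template."""
    values = {**DEFAULT_VALUES, **(overrides or {})}
    return MANIFEST_TEMPLATE.substitute(values)


if __name__ == "__main__":
    # Same template, different settings: three replicas instead of one.
    print(render_manifest({"replicas": 3}))
```

Reusing an existing chart then amounts to keeping the template and changing only the values, which is why adapting a published chart is usually quicker than writing manifests from scratch.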

Is Kubernetes expensive?

Because a Kubernetes cluster consists of nodes (machines), each running many components that consume considerable resources, many people believe that it is prohibitively expensive. The minimum comfortable configuration starts with five to six machines with dual-core CPUs and 4 GB of RAM. For highly loaded services, the recommended RAM on the master node rises to 8 GB. This seems to be at odds with the concept we stated earlier: using high load approaches on a weak server. What makes that concept possible is K3S, a lightweight Kubernetes distribution that drastically reduces the minimum infrastructure requirements.

The K3S distribution was released in February 2019, and we began using it in projects where server capacity was insufficient. The K3S developers managed to shrink Kubernetes roughly fivefold. As a result, the binary is only about 40 MB, the required memory starts at 512 MB, and a worker node consumes about 75 MB. K3S reduces the requirements for Kubernetes so much that it can even run on a Raspberry Pi, so running it on a standard company server is no challenge at all.

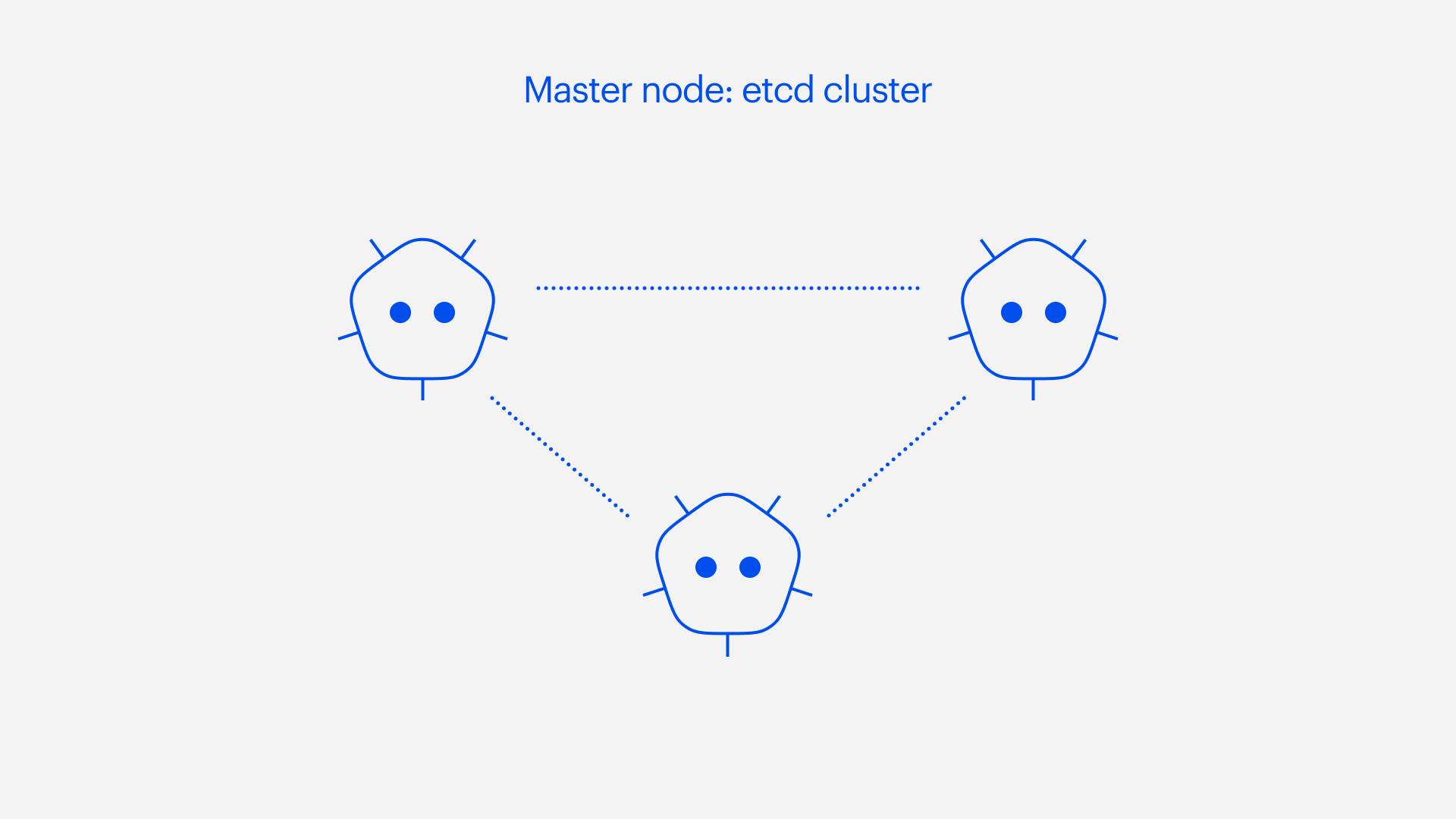

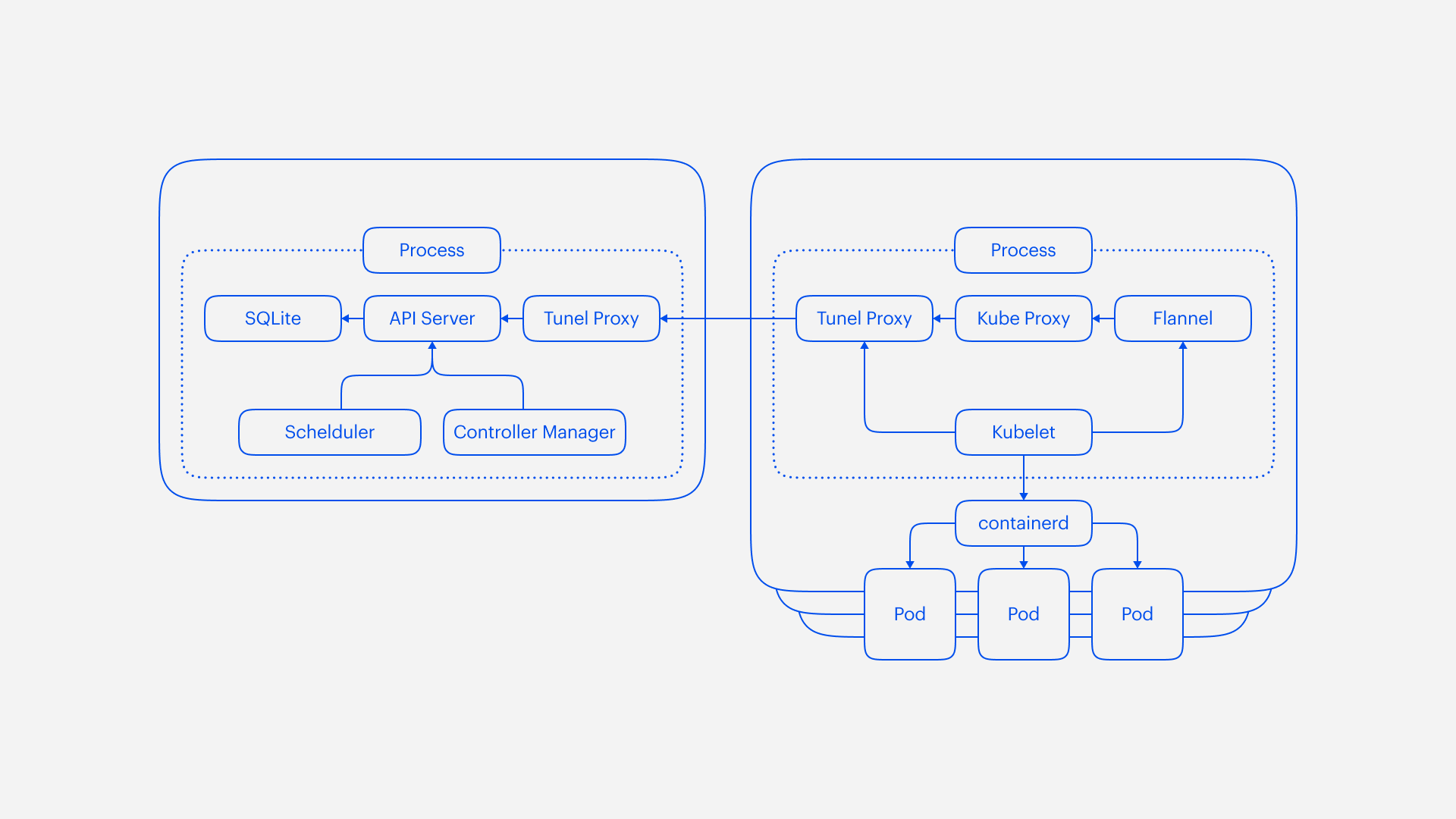

You’re probably wondering how the K3S developers managed to reduce Kubernetes’s “appetite” by so much. If you look closely at the composition of Kubernetes, you can see that the most resource-intensive components include the distributed etcd storage and the Cloud Controller Manager. When weak local hardware must be used, a cloud controller is unnecessary. Moreover, cloud services already have their own Kubernetes managers, so this component can be eliminated. As for the etcd storage, it can be replaced with SQLite or any other database from the list of supported ones.
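Why can a single-file database stand in for a distributed store? Cluster state is essentially a set of keys with values and revision counters, and on a single node that fits comfortably in one SQLite table. The sketch below illustrates this idea only. It is not how K3S implements its storage layer, and the class `SqliteKV`, its table layout, and the example key are assumptions made for illustration.

```python
import sqlite3


class SqliteKV:
    """Toy key-value store with per-key revisions, backed by SQLite."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS kv ("
            "key TEXT PRIMARY KEY, value TEXT NOT NULL, revision INTEGER NOT NULL)"
        )

    def put(self, key, value):
        """Insert the key, or update it and bump its revision."""
        with self.db:  # commits on success, rolls back on error
            cur = self.db.execute(
                "UPDATE kv SET value = ?, revision = revision + 1 WHERE key = ?",
                (value, key),
            )
            if cur.rowcount == 0:
                self.db.execute(
                    "INSERT INTO kv (key, value, revision) VALUES (?, ?, 1)",
                    (key, value),
                )

    def get(self, key):
        """Return (value, revision) for the key, or None if it is absent."""
        return self.db.execute(
            "SELECT value, revision FROM kv WHERE key = ?", (key,)
        ).fetchone()

    def list_prefix(self, prefix):
        """Return all keys that start with the given prefix, sorted."""
        rows = self.db.execute(
            "SELECT key FROM kv WHERE substr(key, 1, ?) = ? ORDER BY key",
            (len(prefix), prefix),
        ).fetchall()
        return [row[0] for row in rows]


if __name__ == "__main__":
    store = SqliteKV()
    store.put("/registry/pods/default/web", '{"phase": "Pending"}')
    store.put("/registry/pods/default/web", '{"phase": "Running"}')
    print(store.get("/registry/pods/default/web"))
    print(store.list_prefix("/registry/pods/"))
```

The trade-off is that a local SQLite file gives up the replication a distributed store provides, which is acceptable on a single weak machine and is the reason other supported databases exist for larger clusters.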

The K3S distribution is represented by the following diagram:

The result is a straightforward solution that, taken to its logical conclusion, might not use Docker at all. By default, the system works with containerd, which was once a part of Docker but is now a standalone container runtime for launching containers. containerd was designed to be simple, reliable, and portable. However, K3S is highly flexible, and Docker can also be used as the container runtime to further facilitate the move to the cloud.

How we use Kubernetes at Evrone

We began working with Kubernetes three years ago when we were developing the Vexor project, a CI/CD service that grew from an internal product into a commercial solution. Kubernetes made it possible to integrate the entire architecture, which used to run on several virtual machines, into a single environment with scalable resources.

Since the services inside the product were now loosely coupled and exchanged data through the RabbitMQ message broker, we could easily migrate containers from one machine to another depending on the required resources. Kubernetes conveniently took care of all the mechanisms of migration, scheduling, request routing, and monitoring the life cycle of services.

Now we launch all new projects in the Kubernetes environment, regardless of their size and complexity, and we’ve also transferred parts of existing projects to this stack from other container orchestration solutions, such as Docker Swarm and Nomad.

At Evrone, we have had great experiences deploying Kubernetes infrastructure on bare-metal servers. This method is a little more complicated than doing it in the cloud, because you are forced to work with specific hardware rather than ordering a typical server with a standard configuration. It requires you to solve the issues of balancing traffic, adding new nodes to the cluster, allocating disk space for persistent data storage, and so on.

There are also projects where it's necessary to deploy the Kubernetes environment in single-node mode (on one bare-metal machine) inside a closed network perimeter, accessible exclusively via VPN, with external traffic available only through an HTTP proxy.

In this situation, working with a critically small amount of resources, we deploy a cluster based on the certified K3S distribution. This allows you to work productively even on a rather weak machine. It also makes it possible to migrate to a large cluster later without rewriting the entire configuration stack.

Scaling with Kubernetes

It’s worth taking future scaling into account from the very beginning of the project. Containerization has become an efficient way to ensure that your project has room to grow. Kubernetes is becoming increasingly popular, is compatible with almost all large cloud platforms, and makes it easy to scale a project (provided it is packaged in containers from the start) using a horizontal or vertical approach.

With horizontal scaling, the number of replicas of loaded services automatically increases, allowing you to spread traffic across several instances and reduce the load on each of them. As soon as the load drops, the number of replicas is reduced and the resources they occupied are freed.
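The replica count for horizontal scaling can be sketched with the proportional rule described in the Kubernetes Horizontal Pod Autoscaler documentation: scale the current replica count by the ratio of the observed metric to its target, round up, and keep the result within configured bounds. The function name `desired_replicas` and its default bounds are illustrative, and this simplified version omits the tolerance and stabilization behavior of the real autoscaler.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas].

    current_metric and target_metric use the same unit, for example
    average CPU utilization in percent.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Load rises: 3 replicas at 90% CPU against a 60% target.
    print(desired_replicas(3, 90, 60))
    # Load drops: 5 replicas at 20% CPU against a 60% target.
    print(desired_replicas(5, 20, 60))
```

Because the rule works in both directions, the same calculation that adds replicas under load also removes them once the load falls, which is what frees the resources again.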

Vertical scaling is a little more complex. Depending on the application's resource consumption, more powerful servers are allocated, while underloaded ones are shut down. However, it is difficult to determine the necessary hardware requirements in advance, so you may end up either overpaying for overly powerful servers or failing to account for peak loads and going down at the most crucial moment.
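The sizing dilemma can be illustrated with a small calculation: pick a percentile of observed memory usage and add some headroom. This is a hypothetical heuristic for illustration, not the algorithm of any specific Kubernetes component, and the function `recommend_request_mib`, its parameters, and the sample data are all assumptions.

```python
import math


def recommend_request_mib(samples_mib, percentile=90, headroom_pct=25):
    """Suggest a memory size in MiB from usage samples.

    Takes the nearest-rank percentile of the samples and adds headroom.
    percentile and headroom_pct are whole-number percentages.
    """
    if not samples_mib:
        raise ValueError("at least one usage sample is required")
    if not 0 < percentile <= 100:
        raise ValueError("percentile must be in the range (0, 100]")
    ordered = sorted(samples_mib)
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    base = ordered[rank - 1]
    return math.ceil(base * (100 + headroom_pct) / 100)


if __name__ == "__main__":
    # Nine ordinary readings and one traffic spike (illustrative numbers).
    usage = [200, 210, 220, 230, 240, 250, 260, 270, 280, 600]
    print(recommend_request_mib(usage, percentile=90))   # ignores the spike
    print(recommend_request_mib(usage, percentile=100))  # sized for the spike
```

Sizing for the 90th percentile is cheaper but leaves the spike uncovered, while sizing for the maximum more than doubles the allocation. That gap is exactly the choice between overpaying and going down under peak load.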

Getting started with Kubernetes

If you do not take future growth into account at the start of your project, your developers will eventually face the challenge of quickly scaling a high load project that already has many users and generates profit. According to the available statistics, you can experience significant losses from just one minute of downtime. In this situation, your service may be down for hours. Our Kubernetes approach guarantees that scaling can be done quickly and smoothly with minimal service disruptions.

For more information about working with Kubernetes, you can watch this talk by Alexander Kirillov, Evrone’s CTO, given at the DevOps meetup at the Metaconf conference held by our company.

Kubernetes helps us manage complex clusters of dozens of virtual machines, deploy the necessary microservices in that environment, and scale them to different loads. There are many tools written for Kubernetes that help DevOps specialists work faster and provide projects with a stable working environment.